The key to unlocking efficiency and growth

We’ve got the knack for any integration challenge you throw at us!

Comprehensive Integration Solutions: Design, Deployment, Security, and Management

We specialize in the comprehensive design, deployment, monitoring, and security of integrations, offering flexible solutions for on-premises, cloud, and hybrid environments.

Our collaborative approach ensures tailored solutions that prioritize performance, reliability, and robust security. Elevate your digital experience and transform your technology interactions.

Our core integration competency lies in connecting disparate systems, streamlining information exchange, and enhancing data management throughout your technological landscape. This results in operational efficiency and improved decision-making.

- Adaptable Environments: Ideal for on-premises, cloud, or hybrid setups.

- Proven Methodologies: Custom solutions backed by proven methods.

- Performance and Reliability: Ensuring unparalleled performance and reliability.

- Security Priority: Emphasizing security in every solution.

- Legacy System

- EAI

- B2B Integration

- Third-Party Integration

- Strategic Planning: We map the integration journey to align with your goals.

- Technical Expertise: Our skilled team ensures seamless integration.

- Security Assurance: Rigorous measures protect your data at every step.

- Continuous Support: Ongoing commitment for system success.

Open-Source Integration: Apache Camel, Camel K, Airflow

Traditional: webMethods, Boomi, MuleSoft, TIBCO

Technology Partner: Software AG, Boomi

Messaging Layer: Kafka and Confluent

Coherent, Enoviq

Explore Different Types of Digital Integration

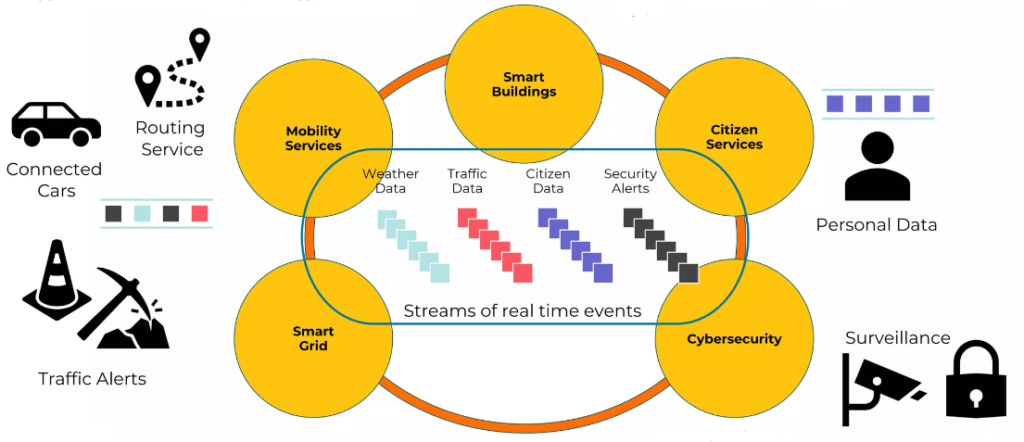

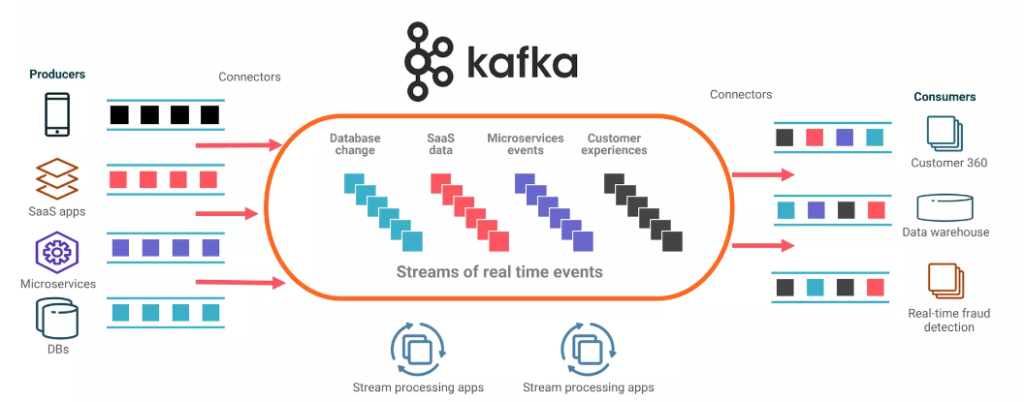

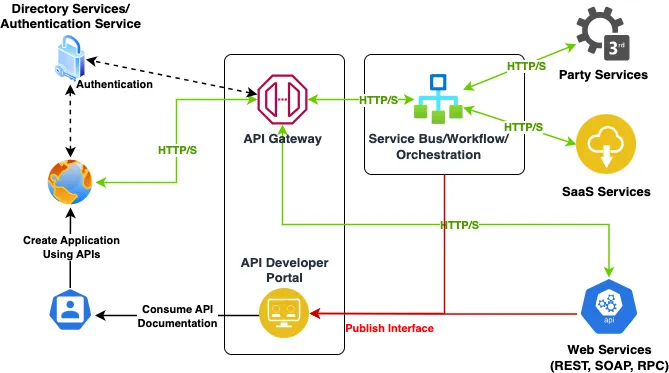

Application Integration

Application integration connects different software applications and systems so they can work together seamlessly, share data, and leverage each other's functionality. The result is a more unified IT ecosystem with better operational efficiency, accuracy, and user experience. Modern application integration demands both flexibility and responsiveness. APIs and event-driven architectures excel in these areas, and together they create seamless connections between your software systems.

APIs provide the foundation for structured communication. They allow applications to request data, trigger actions, and share information in a controlled manner. This is essential for core interactions where systems need to directly exchange data or services.

Event-driven architectures introduce real-time responsiveness and adaptability. Applications generate events related to significant changes or actions, and other applications react as needed. This creates a loosely coupled system where changes in one application can automatically trigger actions in others, streamlining processes and enabling timely reactions to business situations.

By using APIs and event-driven approaches together, you achieve the best of both worlds:

- Decoupled Systems: Applications focus on their tasks and react to events, reducing complex dependencies.

- Real-Time Response: Systems react quickly to events, improving customer experience and business agility.

- Scalability: Handle increasing data and interactions by adding new applications that subscribe to relevant events.

Together, APIs and event-driven architectures pave the way to highly efficient, integrated applications that propel your business forward.
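As a rough illustration of how the two styles combine, the sketch below pairs a direct, API-style call with an in-process event bus. Every name in it (`EventBus`, `OrderService`, the `order.created` event, the stock and notification handlers) is hypothetical and chosen only for this example. In production the bus would typically be a message broker such as Kafka rather than a Python object.

```python
from collections import defaultdict


class EventBus:
    """Minimal synchronous publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Deliver the event to every handler registered for this type.
        for handler in self._subscribers[event_type]:
            handler(payload)


class OrderService:
    """API side: callers invoke methods directly and get a response back."""

    def __init__(self, bus):
        self._bus = bus
        self._orders = {}

    def create_order(self, order_id, sku, quantity):
        order = {"order_id": order_id, "sku": sku, "quantity": quantity}
        self._orders[order_id] = order
        # Event side: announce the change without knowing who listens.
        self._bus.publish("order.created", order)
        return order

    def get_order(self, order_id):
        return self._orders[order_id]


# Two independent subscribers that react to the same event.
stock = {"widget": 10}
notifications = []


def reserve_stock(order):
    stock[order["sku"]] -= order["quantity"]


def notify_customer(order):
    notifications.append(f"Order {order['order_id']} confirmed")


bus = EventBus()
bus.subscribe("order.created", reserve_stock)
bus.subscribe("order.created", notify_customer)

orders = OrderService(bus)
created = orders.create_order("A-1", "widget", 3)
```

`OrderService` never references the stock or notification code, so a new subscriber can be added with one more `subscribe` call and no change to the service itself.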

Data Integration

Data Integration involves combining data from different sources into a unified view, making it accessible and usable across an organization. It involves collecting, transforming, and loading data from various sources such as databases, files, applications, and external systems to create a single source of truth.
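The collect, transform, and load steps can be sketched with nothing but the Python standard library. The two sources here (a CRM export in CSV and a billing feed in JSON), their field names, and the in-memory `warehouse` list are assumptions made for illustration, not a description of any particular system.

```python
import csv
import io
import json

# Two hypothetical sources describing the same customers in different formats.
crm_csv = "customer_id,name\n1,Alice\n2,Bob\n"
billing_json = '[{"customer_id": 1, "balance": 120.5}, {"customer_id": 3, "balance": 40.0}]'


def extract_csv(text):
    """Collect rows from a CSV source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_json(text):
    """Collect rows from a JSON source as dictionaries."""
    return json.loads(text)


def transform(crm_rows, billing_rows):
    """Merge both sources into one record per customer, keyed by customer_id."""
    unified = {}
    for row in crm_rows:
        cid = int(row["customer_id"])  # CSV values arrive as strings
        unified[cid] = {"customer_id": cid, "name": row["name"], "balance": None}
    for row in billing_rows:
        cid = int(row["customer_id"])
        record = unified.setdefault(
            cid, {"customer_id": cid, "name": None, "balance": None}
        )
        record["balance"] = float(row["balance"])
    return [unified[cid] for cid in sorted(unified)]


def load(records, target):
    """Replace the contents of the target store with the unified records."""
    target.clear()
    target.extend(records)


warehouse = []
load(transform(extract_csv(crm_csv), extract_json(billing_json)), warehouse)
```

Customers that appear in only one source are kept with `None` for the missing fields, so gaps stay visible in the unified view instead of being silently dropped.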

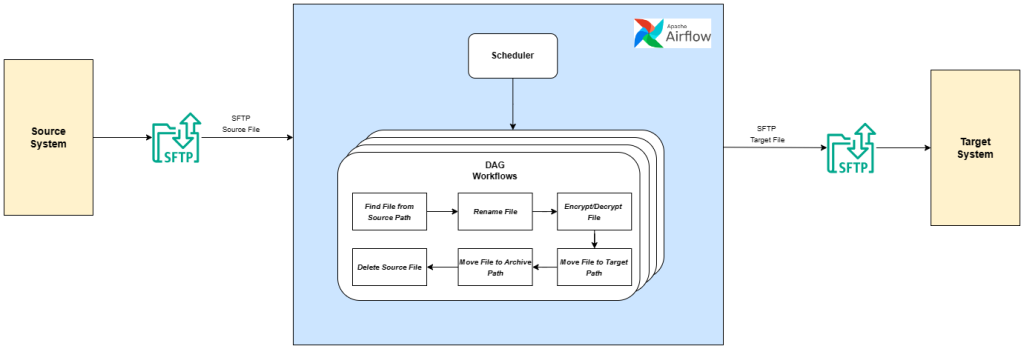

Data Integration using Apache Airflow for file transfers

Apache Airflow is a powerful tool for orchestrating workflows, and it can be effectively used for data integration tasks, specifically file transfers.

It is an open-source platform designed to programmatically author, schedule, and monitor workflows. It's particularly useful for orchestrating complex data pipelines, including file transfers between different systems or storage locations.

Advantages of using Airflow for File Transfers:

- Automation and Orchestration: Airflow automates file transfer tasks, eliminating the need for manual intervention and ensuring consistent execution.

- Scalability: Airflow scales efficiently to handle large volumes of data transfers. You can easily add new tasks or modify existing ones to adapt to changing data pipelines.

- Error Handling and Retries: Airflow allows you to define retries and error handling mechanisms for failed file transfer tasks. This ensures data integrity and smooth operation of your pipelines.

- Monitoring and Logging: Airflow provides a centralized view of your data pipelines, allowing you to monitor their progress and troubleshoot any issues.
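A minimal sketch of a file-transfer task with retries might look like the following, assuming Airflow 2.4 or later. The DAG id, task id, file paths, and the `copy_file` helper are hypothetical. The `retries` and `retry_delay` settings are standard Airflow task arguments. The Airflow import is guarded so the transfer function can be tried on a machine without Airflow installed.

```python
import shutil
import tempfile
from datetime import datetime, timedelta
from pathlib import Path


def copy_file(src: str, dst: str) -> str:
    """Copy one file and return the destination path.

    A missing source raises FileNotFoundError, which lets the scheduler
    mark the task as failed and apply its retry policy.
    """
    destination = Path(dst)
    destination.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src, destination)
    return str(destination)


try:
    # Optional dependency: only needed to register the DAG with Airflow.
    from airflow import DAG
    from airflow.operators.python import PythonOperator
except ImportError:
    DAG = None

if DAG is not None:
    with DAG(
        dag_id="file_transfer_example",  # hypothetical name
        start_date=datetime(2024, 1, 1),
        schedule=None,  # run only when triggered manually
        catchup=False,
        # Retry a failed transfer up to 3 times, 5 minutes apart.
        default_args={"retries": 3, "retry_delay": timedelta(minutes=5)},
    ) as dag:
        PythonOperator(
            task_id="copy_report",
            python_callable=copy_file,
            op_kwargs={
                "src": "/data/incoming/report.csv",  # hypothetical paths
                "dst": "/data/archive/report.csv",
            },
        )

# Local check of the transfer function, independent of Airflow.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "incoming" / "report.csv"
    source.parent.mkdir()
    source.write_text("id,amount\n1,10\n")
    target = copy_file(str(source), str(Path(tmp) / "archive" / "report.csv"))
    copied_text = Path(target).read_text()
```

In a real DAG file the local check at the bottom would be removed, since Airflow runs module-level code every time it parses the file.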

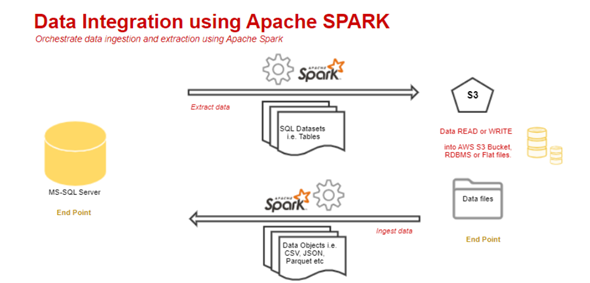

Data Integration using Apache Spark across diverse data sources

Data integration with Apache Spark uses Spark's distributed computing capabilities to read data efficiently from multiple sources, transform it, and write the integrated result to the target systems. Spark is a powerful tool for working with big data.

Benefits of using Spark for data integration

- Scalability: Handles large datasets efficiently by distributing processing across a cluster.

- Performance: Can offer significant speed improvements over traditional ETL tools by processing data in memory and in parallel.

- Unified Platform: Provides a single environment for working with data from multiple sources.
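The read, transform, and write pattern described above could be sketched in PySpark roughly as follows. The function name, file paths, and the `customer_id` join column are hypothetical, and the example assumes PySpark is installed and that the JSON source holds one object per line, which is the default format `spark.read.json` expects.

```python
try:
    from pyspark.sql import SparkSession
except ImportError:  # lets this module load where PySpark is not installed
    SparkSession = None


def integrate_orders(spark, orders_csv, customers_json, output_path):
    """Join orders (CSV) with customers (JSON) and write the result as Parquet."""
    # Source 1: CSV with a header row; let Spark infer column types.
    orders = (
        spark.read.option("header", True)
        .option("inferSchema", True)
        .csv(orders_csv)
    )
    # Source 2: JSON Lines file with one customer per line.
    customers = spark.read.json(customers_json)

    # Transform: enrich each order with its customer attributes.
    enriched = orders.join(customers, on="customer_id", how="left")

    # Target: overwrite any previous output at this location.
    enriched.write.mode("overwrite").parquet(output_path)
    return enriched


def main():
    """Example entry point; the paths below are placeholders."""
    spark = SparkSession.builder.appName("integration-sketch").getOrCreate()
    try:
        integrate_orders(
            spark,
            "data/orders.csv",
            "data/customers.json",
            "out/enriched_orders",
        )
    finally:
        spark.stop()
```

The left join keeps every order even when no matching customer record exists. Other sources, such as a JDBC database, would follow the same shape with a different reader.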

Sampath Kumar Sabbineni (Sam) began his career as a software developer and gained valuable experience in various roles at prominent MNCs such as Deloitte, Capgemini, and Amway over a span of 13 years. In 2016, he embarked on his entrepreneurial journey by joining Kraft Software Solutions in Kuala Lumpur, Malaysia, and has since expanded globally as VKraft Software Services with branches in Singapore, India, Indonesia, and the USA. Sam provides a range of innovative digital solutions addressing challenges in the digital era. His vision is to lead at the forefront of the digital platform, offering products with unique functionalities across diverse sectors, including Healthcare, Insurance, Banking, Retail, Logistics, Environmental, and Manufacturing.
